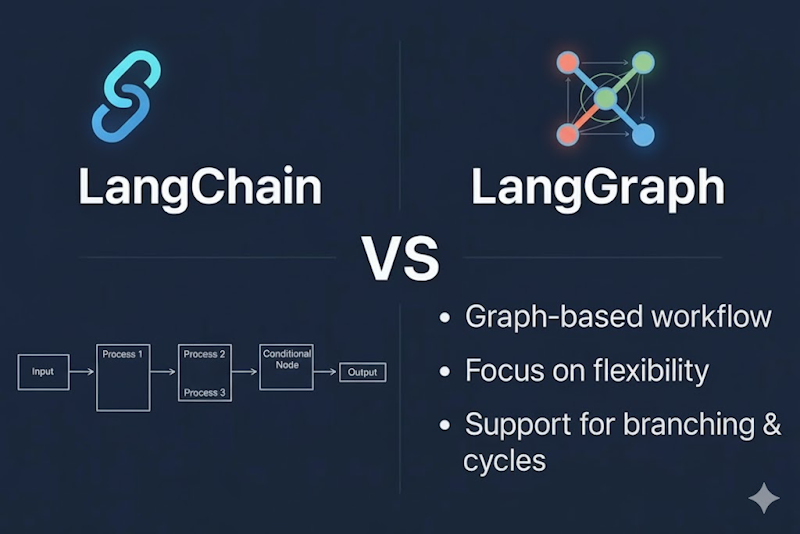

If you’ve been building AI applications with large language models (LLMs), you’ve probably encountered LangChain, the popular framework that has become a core part of agentic AI development since its launch in 2022. But there’s a newer player in the game: LangGraph, launched in early 2024 by the same team behind LangChain.

In this post, we’ll compare LangChain and LangGraph and see which is the better fit for different real-world situations. While the two frameworks share DNA, they solve fundamentally different problems. We’ll explore what makes LangGraph unique, how it differs from LangChain, and, most importantly, which one you should choose for your next AI project.

The Evolution: From Chains to Graphs

To understand LangGraph, we first need to understand what LangChain couldn’t do. Please feel free to refer to my earlier post about LangChain if you are not yet fully familiar with that framework or would like to refresh your understanding before moving forward with this post.

LangChain: The Linear Orchestrator

LangChain revolutionized how developers build LLM applications by providing modular building blocks that could be “chained” together. Think of it as a conveyor belt where data flows from one step to the next in a predictable sequence:

- Retrieve data from a database

- Summarize the content

- Answer the user’s question
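To make the conveyor-belt idea concrete, here is a minimal plain-Python sketch of chaining. It does not use LangChain itself: the `Step` class and the three stand-in functions are hypothetical, and only mimic the way steps are piped together so that each one consumes the previous one's output.

```python
from typing import Any, Callable


class Step:
    """Wraps a function so steps can be joined with `|`, conveyor-belt style."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Composing two steps yields a new step that runs self, then other.
        return Step(lambda value: other.fn(self.fn(value)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# Hypothetical stand-ins for a database lookup and two LLM calls.
def retrieve_fn(question: str) -> dict:
    docs = ["LangGraph adds cycles.", "LangChain builds chains."]
    return {"question": question, "docs": docs}


def summarize_fn(data: dict) -> dict:
    return {**data, "summary": " ".join(data["docs"])}


def answer_fn(data: dict) -> str:
    return f"Q: {data['question']} | A (from summary): {data['summary']}"


chain = Step(retrieve_fn) | Step(summarize_fn) | Step(answer_fn)
result = chain.invoke("What does LangGraph add?")
```

Data only ever moves forward here: there is no way for `answer_fn` to send control back to `retrieve_fn`.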

This linear, Directed Acyclic Graph (DAG) approach works brilliantly for straightforward workflows where each step follows the previous one. LangChain excels at:

- Document processing pipelines

- Question-answering systems

- Content generation workflows

- Simple chatbots with clear conversation paths

LangChain is reactive: for the most part, execution moves forward one step at a time, with each step driven by the output of the previous one. But what happens when your AI application needs to:

- Loop back and retry failed operations?

- Make dynamic decisions based on runtime conditions?

- Maintain context across multiple sessions?

- Coordinate multiple AI agents that each handle part of a larger task?

This is where LangChain’s linear architecture starts to show its limitations—and where LangGraph enters the scene.

What is LangGraph?

LangGraph is a stateful orchestration framework built on top of LangChain for creating complex, non-linear AI workflows. Instead of thinking in chains, LangGraph models applications as directed graphs where:

- Nodes represent actions (calling an LLM, using a tool, making a decision)

- Edges define the flow between nodes (including conditional and cyclical paths)

- State is a first-class citizen that persists across the entire workflow
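The three ideas above (nodes, edges, shared state) can be sketched in plain Python. This is a toy runner written for illustration, not LangGraph's implementation or API; `run_graph`, `END`, and the example nodes are all hypothetical names. The router on `fetch` creates a cycle, which a straight chain cannot express.

```python
from typing import Callable, Dict, Union

State = Dict[str, object]
Node = Callable[[State], State]
# An edge is either a fixed target name or a router that inspects the state.
Edge = Union[str, Callable[[State], str]]

END = "__end__"


def run_graph(nodes: Dict[str, Node], edges: Dict[str, Edge],
              entry: str, state: State, max_steps: int = 20) -> State:
    current = entry
    for _ in range(max_steps):
        if current == END:
            return state
        # Each node returns a partial update that is merged into the shared state.
        state = {**state, **nodes[current](state)}
        edge = edges[current]
        current = edge(state) if callable(edge) else edge
    raise RuntimeError("max_steps exceeded; the graph may be cycling forever")


def fetch(state: State) -> State:
    attempts = state["attempts"] + 1
    # Pretend the first two attempts fail and the third succeeds.
    return {"attempts": attempts, "data": "rows" if attempts >= 3 else None}


def respond(state: State) -> State:
    return {"answer": f"got {state['data']} after {state['attempts']} attempts"}


def route_after_fetch(state: State) -> str:
    # Conditional edge: loop back to fetch until data arrives.
    return "respond" if state["data"] is not None else "fetch"


final = run_graph(
    nodes={"fetch": fetch, "respond": respond},
    edges={"fetch": route_after_fetch, "respond": END},
    entry="fetch",
    state={"attempts": 0, "data": None},
)
```

The `max_steps` guard is a design choice in this sketch: once cycles are allowed, something has to bound them.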

Launched in early 2024, LangGraph quickly became the go-to framework for building production-ready AI agents. Companies like LinkedIn, Replit, Elastic, and Uber have successfully deployed LangGraph-based systems that handle millions of interactions.

The Core Philosophy

LangGraph was designed with lessons learned from LangChain’s real-world deployments. The team identified three critical needs that weren’t well-served by linear chains:

- Cycles and Loops: Real AI agents need to iterate, retry, and refine their approach

- State Management: Complex applications need to remember context across steps and sessions

- Human-in-the-Loop: Production systems need human oversight at critical decision points

LangChain vs LangGraph — Key Differences

A concise comparison focused on architecture, state, control flow, use cases, and learning curve.


| Aspect | LangChain | LangGraph |
|---|---|---|
| Workflow Architecture | Linear Chains (DAG) | Cyclical Graphs |
| State Management | Implicit State | Explicit State |
| Control Flow | Sequential | Graph-Based |
| Use Case Focus | Rapid Prototyping | Production Agents |
| Learning Curve | Beginner-Friendly | Advanced but Powerful |

Sequential control flow in LangChain:

```python
# LangChain example
chain = (
    retrieval_chain
    | summarization_chain
    | answer_chain
)
result = chain.invoke(query)
```

Graph-based control flow in LangGraph:

```python
# LangGraph example
graph = StateGraph(AgentState)
graph.add_node("retrieve", retrieve_data)
graph.add_node("summarize", summarize_data)
graph.add_node("answer", generate_answer)
graph.add_conditional_edges(
    "retrieve",
    should_retry,
    {"retry": "retrieve", "continue": "summarize"}
)
```

Tip

Use LangChain for quick prototypes and single-pass LLM workflows. Choose LangGraph when you need persistent state, loops, or production-grade agent orchestration.
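The LangGraph example in the comparison references `AgentState` and `should_retry` without defining them. One plausible shape for both is sketched below; the field names and the `MAX_RETRIES` limit are assumptions made for illustration, not taken from any real project.

```python
from typing import List, TypedDict


class AgentState(TypedDict):
    """Hypothetical state schema for the retrieve/summarize/answer graph."""
    query: str
    documents: List[str]
    retries: int


MAX_RETRIES = 2  # assumed limit so the retry loop always terminates


def should_retry(state: AgentState) -> str:
    """Return the label that the conditional-edge mapping looks up."""
    if not state["documents"] and state["retries"] < MAX_RETRIES:
        return "retry"      # mapped to the "retrieve" node
    return "continue"       # mapped to the "summarize" node
```

For the loop to end, the retrieve node would need to increment `retries` each time it runs; that part is left out here.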

LangGraph’s Powerful Features

Built-in State Persistence

LangGraph manages state persistence through checkpointers. Once one is configured, your agent can:

- Pause and resume from the exact point it stopped

- Survive crashes and recover gracefully

- Maintain context across days or weeks

- Support “time travel” debugging
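The mechanism behind these capabilities is checkpointing: saving a snapshot of the state after every step, keyed by a conversation or thread identifier. The sketch below shows the idea in plain Python. `CheckpointStore` and `run` are hypothetical names, and the store is in-memory only, so a real system would swap in a database to actually survive a crash.

```python
import copy
from typing import Callable, Dict, List, Optional

State = Dict[str, object]


class CheckpointStore:
    """In-memory stand-in for a durable checkpoint store."""

    def __init__(self) -> None:
        self._history: Dict[str, List[State]] = {}

    def save(self, thread_id: str, state: State) -> None:
        self._history.setdefault(thread_id, []).append(copy.deepcopy(state))

    def latest(self, thread_id: str) -> Optional[State]:
        snapshots = self._history.get(thread_id, [])
        return copy.deepcopy(snapshots[-1]) if snapshots else None

    def history(self, thread_id: str) -> List[State]:
        # Every past snapshot is kept, which is what enables "time travel".
        return copy.deepcopy(self._history.get(thread_id, []))


def run(steps: List[Callable[[State], State]], store: CheckpointStore,
        thread_id: str, stop_after: Optional[int] = None) -> State:
    # Resume from the last checkpoint if one exists, else start fresh.
    state = store.latest(thread_id) or {"next_step": 0, "log": []}
    executed = 0
    while state["next_step"] < len(steps):
        if stop_after is not None and executed == stop_after:
            break  # simulate a crash or a deliberate pause
        state = steps[state["next_step"]](state)
        state["next_step"] += 1
        store.save(thread_id, state)
        executed += 1
    return state


def make_step(name: str) -> Callable[[State], State]:
    def step(state: State) -> State:
        return {**state, "log": state["log"] + [name]}
    return step


steps = [make_step("plan"), make_step("search"), make_step("write")]
store = CheckpointStore()
partial = run(steps, store, "thread-1", stop_after=2)  # stops after two steps
resumed = run(steps, store, "thread-1")                # picks up at "write"
snapshots = store.history("thread-1")
```

Inspecting `snapshots` gives the state as it looked after each step, which is the basis for replaying or branching from an earlier point.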

Human-in-the-Loop Workflows

Production AI systems need human oversight. LangGraph makes it easy to:

- Pause execution for human approval

- Allow humans to modify the state before continuing

- Implement approval workflows at critical decision points

- Create collaborative human-AI systems
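The pause-and-resume pattern can be illustrated with a Python generator, which suspends at the approval point and continues once a decision is sent in. This is only a sketch of the control flow, not LangGraph's interrupt mechanism; `refund_workflow`, `resume`, and the refund scenario are invented for the example.

```python
from typing import Dict, Generator

State = Dict[str, object]


def refund_workflow(request: State) -> Generator[State, State, State]:
    state = {"request": request, "proposed_amount": request["amount"], "status": "pending"}
    # Pause here: hand the state to a human and wait for their decision.
    decision = yield state
    if not decision.get("approved", False):
        return {**state, "status": "rejected"}
    # The reviewer may edit the state before execution continues.
    amount = decision.get("amount", state["proposed_amount"])
    return {**state, "proposed_amount": amount, "status": "refunded"}


def resume(workflow: Generator[State, State, State], decision: State) -> State:
    """Send the human's decision in and collect the final state."""
    try:
        workflow.send(decision)
    except StopIteration as done:
        return done.value
    raise RuntimeError("workflow paused again; another review is needed")


workflow = refund_workflow({"customer": "c-42", "amount": 120})
pending = next(workflow)  # runs until the approval point
final = resume(workflow, {"approved": True, "amount": 100})
```

In a production system the paused state would be persisted rather than held in a live generator, so the approval could arrive hours later or from a different process.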

Multi-Agent Orchestration

LangGraph excels at coordinating multiple AI agents:

- Collaborative: Agents share a common workspace and see each other’s work

- Hierarchical: A supervisor agent coordinates specialist sub-agents

- Sequential: Agents hand off work in a specific order

- Parallel: Multiple agents work simultaneously on different aspects
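As an illustration of the hierarchical pattern, the sketch below has a supervisor function choose which specialist runs next based on the shared state. The agents here are ordinary functions standing in for LLM-backed agents, and all names (`supervisor`, `researcher`, `writer`, `run_team`) are hypothetical.

```python
from typing import Callable, Dict

State = Dict[str, object]


def researcher(state: State) -> State:
    return {**state, "notes": f"facts about {state['task']}"}


def writer(state: State) -> State:
    return {**state, "draft": f"Article using {state['notes']}"}


SPECIALISTS: Dict[str, Callable[[State], State]] = {
    "researcher": researcher,
    "writer": writer,
}


def supervisor(state: State) -> str:
    """Decide which specialist runs next, or 'done' when the work is complete."""
    if "notes" not in state:
        return "researcher"
    if "draft" not in state:
        return "writer"
    return "done"


def run_team(task: str, max_turns: int = 10) -> State:
    state: State = {"task": task, "trace": []}
    for _ in range(max_turns):
        choice = supervisor(state)
        if choice == "done":
            return state
        state = SPECIALISTS[choice](state)
        # Record who acted, so the hand-offs can be inspected afterwards.
        state["trace"] = state["trace"] + [choice]
    raise RuntimeError("supervisor did not finish within max_turns")


result = run_team("graph-based agents")
```

Because every agent reads and writes the same state, this sketch also covers the collaborative case; a sequential hand-off is simply a supervisor with a fixed order.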

Streaming and Real-Time Updates

Rather than returning only a final result, LangGraph supports:

- Token-by-token streaming from LLMs

- Intermediate step streaming

- Real-time state updates

- Live progress monitoring
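Streaming of intermediate steps amounts to yielding each update as soon as it is produced instead of waiting for the whole run. A minimal generator-based sketch follows; `stream_steps` and `fake_llm_tokens` are hypothetical helpers, and the token stream simply splits a string on whitespace to stand in for a model.

```python
from typing import Callable, Dict, Iterator, List, Tuple

State = Dict[str, object]


def stream_steps(steps: List[Tuple[str, Callable[[State], State]]],
                 state: State) -> Iterator[Tuple[str, State]]:
    """Yield (step_name, update) as soon as each step finishes."""
    for name, step in steps:
        update = step(state)
        state.update(update)
        yield name, update


def fake_llm_tokens(text: str) -> Iterator[str]:
    # Stand-in for a model that emits its reply token by token.
    for token in text.split():
        yield token


steps = [
    ("retrieve", lambda s: {"docs": 3}),
    ("answer", lambda s: {"answer": f"Found {s['docs']} documents"}),
]
events = list(stream_steps(steps, {}))
tokens = list(fake_llm_tokens("Found 3 documents"))
```

A caller would normally loop over `stream_steps(...)` directly and update a progress display on each event, rather than collecting everything into a list as done here for inspection.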

When to Choose LangChain

Choose LangChain when you need:

✅ Quick Prototyping

- Building MVPs or proof-of-concepts

- Testing ideas before committing to complex architectures

- Rapid iteration and experimentation

- Time-constrained projects

✅ Simple, Linear Workflows

- Document summarization

- Text classification

- Single-pass question answering

- Content generation

- Data extraction from documents

✅ Straightforward Applications

- Basic chatbots with predefined conversation flows

- Translation services

- Simple data transformation pipelines

- Report generation

✅ Learning LLM Development

- First projects with large language models

- Understanding prompt engineering basics

- Exploring different LLM capabilities

- Building foundational knowledge

Example Use Cases:

- A document summarization tool that extracts key points from PDFs

- A content generator for blog posts based on topics

- A simple FAQ chatbot with predefined responses

- An email classifier that categorizes incoming messages

When to Choose LangGraph

Choose LangGraph when you need:

✅ Complex Agent Systems

- Multi-step reasoning processes

- Autonomous decision-making

- Tool-using agents that need to iterate

- Self-correcting workflows

✅ Stateful Applications

- Conversational agents with long-term memory

- Applications that span multiple sessions

- Context-aware personal assistants

- Progress-tracking task managers

✅ Non-Linear Workflows

- Conditional branching based on runtime data

- Loops and retries for error recovery

- Dynamic routing between different paths

- Workflows that revisit previous steps

✅ Multi-Agent Coordination

- Systems with specialized AI agents

- Collaborative problem-solving

- Hierarchical agent structures

- Agent swarms or clusters

✅ Production-Grade Reliability

- Enterprise applications with real users

- Systems requiring robust error handling

- Applications needing observability and monitoring

- Long-running background processes

Example Use Cases:

- LinkedIn’s AI recruiting agent that automates hiring workflows

- Replit’s coding assistant that helps millions of users debug and write code

- Elastic’s AI assistant that evolved from LangChain to LangGraph

- SQL Bot that transforms natural language into database queries with error correction

- Customer support copilots that remember context across conversations

Real-World Success Stories

LinkedIn: SQL Bot

LinkedIn deployed an internal AI assistant called SQL Bot that transforms natural language questions into SQL queries. Built on LangGraph, it:

- Finds the right database tables

- Writes and executes queries

- Fixes errors automatically

- Enables non-technical employees to access data insights

Replit: AI Coding Agent

Replit’s agent, built with LangGraph, serves millions of users by:

- Understanding coding requests

- Writing code across multiple files

- Testing and debugging automatically

- Iterating based on results

Uber: Code Migration at Scale

Uber’s Developer Platform AI team uses LangGraph for:

- Large-scale codebase migrations

- Automated refactoring

- Intelligent code analysis

- Custom workflow orchestration

The Best of Both Worlds

Here’s an important insight: It’s not LangChain vs LangGraph—it’s LangChain AND LangGraph.

These frameworks are designed to work together:

- Use LangChain components within LangGraph
  - Leverage LangChain’s 700+ integrations
  - Use chains, tools, and prompts as LangGraph nodes
  - Build on existing LangChain expertise
- Start with LangChain, evolve to LangGraph
  - Prototype quickly with LangChain
  - Migrate to LangGraph when complexity grows
  - Reuse your LangChain components
- Mix and match as needed
  - Use LangChain for simple subsystems
  - Use LangGraph for complex orchestration
  - Choose the right tool for each part of your application

Decision Framework: A Practical Guide

Ask yourself these questions:

Question 1: Does your workflow need loops or retries?

- No → Consider LangChain

- Yes → Lean toward LangGraph

Question 2: Do you need to maintain context across sessions?

- No → LangChain is sufficient

- Yes → LangGraph provides better state management

Question 3: Will multiple AI agents need to coordinate?

- No → LangChain works well

- Yes → LangGraph is purpose-built for this

Question 4: Is this a production system with real users?

- No (prototype/demo) → LangChain is faster to build

- Yes (production) → LangGraph offers better reliability

Question 5: Does the workflow have conditional branching?

- Simple branches → LangChain can handle it

- Complex decision trees → LangGraph is more maintainable

Question 6: What’s your team’s experience level?

- New to LLM development → Start with LangChain

- Experienced, building complex systems → LangGraph provides more power
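The six questions can be encoded as a small helper, shown below as one possible reading of the framework. The thresholds (zero signals, three or more signals) and the tie-breaking rule are assumptions of this sketch rather than anything prescribed by either framework.

```python
from dataclasses import dataclass


@dataclass
class ProjectProfile:
    needs_loops: bool            # Question 1
    cross_session_context: bool  # Question 2
    multi_agent: bool            # Question 3
    production: bool             # Question 4
    complex_branching: bool      # Question 5
    experienced_team: bool       # Question 6


def recommend(profile: ProjectProfile) -> str:
    # The first five questions each point at a LangGraph capability.
    graph_signals = sum([
        profile.needs_loops,
        profile.cross_session_context,
        profile.multi_agent,
        profile.production,
        profile.complex_branching,
    ])
    if graph_signals == 0:
        return "LangChain"
    if graph_signals >= 3:
        return "LangGraph"
    # Mixed answers: team experience breaks the tie (an assumed rule).
    if profile.experienced_team:
        return "LangGraph"
    return "LangChain first, then migrate to LangGraph"
```

For example, a prototype that only needs retries, built by a team new to LLM development, comes out as "LangChain first, then migrate to LangGraph".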

The Future of LangChain and LangGraph

Both frameworks are actively evolving:

LangChain’s Roadmap

- Expanding integrations (already 700+)

- Simplifying common patterns

- Focusing on ease of use

- Remaining the best way to prototype quickly

LangGraph’s Roadmap

- Enhanced production monitoring

- Better visual design tools (LangGraph Studio)

- Advanced multi-agent features

- Improved debugging capabilities

Recent LangGraph Updates (2024-2025):

- Cross-thread memory support

- Semantic search for long-term memory

- Tools can directly update graph state

- Human-in-the-loop improvements with the `interrupt` feature
- Dynamic agent flows with the `Command` tool
- Integration with Model Context Protocol (MCP)

Complementary Tools in the Ecosystem:

LangSmith

Monitor, evaluate, and debug your applications

- Works with both LangChain and LangGraph

- Visual debugging and tracing

- Performance analytics

- Production monitoring

LangGraph Platform

Deploy and scale LangGraph applications

- Managed hosting service

- Horizontal scaling

- Built-in persistence

- Easy deployment

LangGraph Studio

Visual development environment

- Design workflows graphically

- Debug state transitions

- Test agents interactively

- Share and collaborate

Migration Path: From LangChain to LangGraph

If you’ve built something with LangChain and need LangGraph’s capabilities:

- Identify pain points: Where are you manually managing state or implementing workarounds?

- Map your workflow: Convert your chain into a graph with nodes and edges

- Define state schema: What information needs to persist?

- Reuse LangChain components: Your chains, tools, and prompts can become nodes

- Add graph features: Implement loops, conditional edges, and state management

- Test incrementally: Migrate one section at a time
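The mapping and reuse steps can be sketched as follows: an ordered list of existing steps becomes a set of nodes plus straight-line edges, and a graph feature (here a retry loop) is then added by replacing one edge with a router. Nodes and edges are plain dictionaries in this sketch, a hypothetical representation chosen for illustration, and `chain_to_graph` is not a function from either library.

```python
from typing import Callable, Dict, List, Tuple, Union

State = Dict[str, object]
Node = Callable[[State], State]
Edge = Union[str, Callable[[State], str]]
END = "__end__"


def chain_to_graph(steps: List[Tuple[str, Node]]) -> Tuple[Dict[str, Node], Dict[str, Edge]]:
    """Turn an ordered list of named steps into nodes plus straight-line edges."""
    nodes = {name: fn for name, fn in steps}
    names = [name for name, _ in steps]
    edges: Dict[str, Edge] = {
        name: (names[i + 1] if i + 1 < len(names) else END)
        for i, name in enumerate(names)
    }
    return nodes, edges


# Existing chain steps (hypothetical), reused unchanged as nodes.
steps = [
    ("retrieve", lambda s: {**s, "docs": []}),
    ("summarize", lambda s: {**s, "summary": "placeholder summary"}),
    ("answer", lambda s: {**s, "answer": "placeholder answer"}),
]
nodes, edges = chain_to_graph(steps)


def retry_or_continue(state: State) -> str:
    # Graph feature added after migration: retry retrieval while no
    # documents came back, up to an assumed limit of two retries.
    if state["docs"] or state.get("retries", 0) >= 2:
        return "summarize"
    return "retrieve"


edges["retrieve"] = retry_or_continue
```

Migrating one edge at a time like this keeps the rest of the workflow identical to the original chain, which makes incremental testing straightforward.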

Conclusion: Which Should You Choose?

The answer isn’t one-size-fits-all. Here’s the TL;DR:

Choose LangChain if:

- You’re building something simple and straightforward

- You need to prototype quickly

- Your workflow is predictable and linear

- You’re new to LLM development

Choose LangGraph if:

- You’re building complex, production-grade agents

- You need state management and persistence

- Your workflow has loops, branches, or conditional logic

- You’re coordinating multiple AI agents

- Reliability and monitoring are critical

Use Both when:

- Starting simple but expecting to scale

- Different parts of your system have different complexity levels

- You want LangChain’s ecosystem with LangGraph’s orchestration

Remember: LangGraph is not replacing LangChain—it’s extending it for more sophisticated use cases. As your AI applications grow in complexity, LangGraph provides the infrastructure to build reliable, maintainable, and scalable agent systems.

The future of AI development is agentic, and both LangChain and LangGraph are evolving to support that future. Choose the framework that matches your current needs, but don’t be afraid to evolve as your requirements grow.

Ready to get started? Here are a few resources for your reference on these topics:

What’s your experience with LangChain and LangGraph? Are you building agents for production? Share your thoughts in the comments!